Editor’s Note:

In this article we talk to Diederik Jeangout, creative partner at Fledge, who reveals how artificial intelligence (AI) drove their latest project focused on plastic pollution. Through tools such as Midjourney and Runway Gen 2, the team overcame technological challenges and leveraged creative freedom to generate amazing results. Diederik highlights the transformative potential of AI in advertising, offering an optimistic vision for the future of creativity in the digital age.

Can you explain your background and what led you to become a creative partner at Fledge?

I’ve been working for about a decade as a creative in advertising agencies, handling both the copywriting and the art direction, but my love has always been in finding core concepts – the spark of an idea if you will. There’s magic in having a Word doc with hundreds of lines of random ideas until one grabs your attention, and you just know it’s the one.

I got to a point where I had done many different campaigns and had the chance to work with different teams and creative directors, all of which shaped me into the creative person I am today. I felt it was time to take that experience and build a creative company of my own. My brother Roeland had a production company that, up until that point, was mainly focused on social content, but he wanted it to evolve into a full-fledged production company focused on high-level execution that would also create in-house films for good causes.

As kids, together with a buddy of Roeland's, we entered a local youth art competition with a short film we had made, and we won. So, in hindsight, it was our first taste of what we could accomplish: two very different personalities, but for that very reason a strong, complementary duo.

Tell us a little bit about your project and how using AI made it different from previous ones.

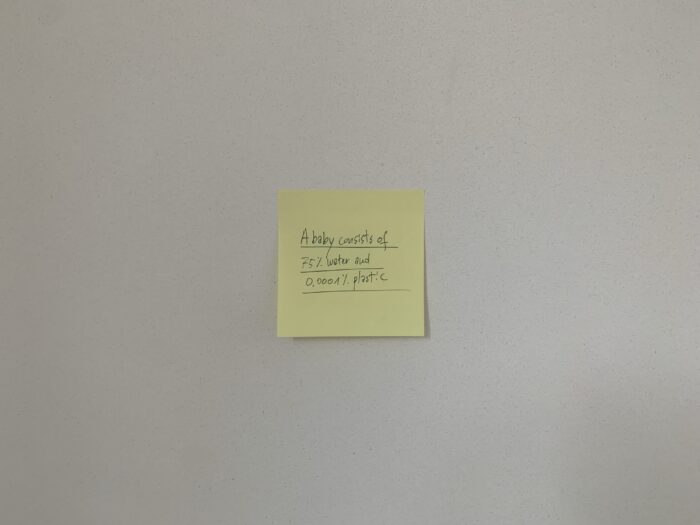

It all started with a single Post-it hanging on my wall saying ‘A baby consists of 75% water and 0.0001% plastic’. I doubt those numbers are scientifically correct (the idea is), yet this little yellow Post-it was a constant reminder of the awful fact that nanoplastics have already been found in fetuses inside the womb.

I’d always envisioned this story as a vignette film that started with a more sociocritical approach but would evolve into an emotional story, with the punch or reveal at the end. But it also had to be visually captivating, plastic being this weird contradiction: horrible for the environment, yet colourful and shaped into so many objects we crave and love.

Realistically, with the many visual ideas I had in mind, it was never going to be possible to realise this film with live-action footage and VFX. So the project got shelved for a while. And then suddenly Runway Gen 2 came out, and we knew it was only a matter of time before we could start creating this film.

Tell us about your process. How long did it take? What techniques did you use? What programs did you use?

In essence it’s an extremely simple workflow. Over the course of a few months we prompted about 1,300 Midjourney images, trying to find that perfect still – a thumbnail, if you will. We sometimes fed unsatisfactory Midjourney results into Photoshop to refine them into what we wanted but couldn’t get Midjourney to produce. Of course, Photoshop’s AI feature came in extremely handy for this. Then we fed them back into Midjourney until we got the images we wanted.

We then took those stills and ran them through Runway Gen 2, rendering out about 1,100 clips in total. Throughout the 2-3 months we were working on this film (on and off in the evenings, at weekends and on the odd empty day in the schedule), we saw Runway Gen 2 getting better and gaining additional features that really helped us achieve what we wanted.

In the end, I used only about 46 clips in the final edit, so a lot of rushes were thrown away. We edited in Premiere Pro, adding just a few stock shots at the end because I wanted the fetus to look 100% realistic and scientifically correct.

Were there any other considerations?

The NGO we were working with, Plastic Soup Foundation, loved the concept and basic film idea and gave us pretty much 100% creative freedom to tell the story as we intended. I think that was crucial when working with the AI tools we had at our disposal, because the key thing in this process was to embrace the limitations we were working with while also welcoming the unexpected surprises AI gave us.

For instance, in the opening sequence there’s a shot with a hand covered in plastic, yet it feels pretty oily as well, as if you’re seeing plastic and oil at the same time. That wasn’t the intention, but it served as a perfect transition shot going from the oil sequence towards the plastic sequence.

And of course, entering this project not knowing what to expect means you need to be comfortable throwing a lot of hours away. There was one single shot that took about 3 hours to make, from Midjourney to Photoshop and back, and then into Runway Gen 2, only to be scrapped in the end. And it was far from the only one.

How did you go about storyboarding? Did you need to use AI? We’d love to see some initial frames.

The fact that we are a small team, and our client gave us their total trust in telling the story, meant that we didn’t use storyboards. We started out with a finished script, knowing the different chapters and first visual ideas, but we kept tons of mental space while executing the thing – everything was still possible.

We started out chronologically, and once we had the first sequence finished, we started hopping back and forth between chapters of the film, based on our mood and the time we had that day (some parts needed way more attention and work than others).

What was your favorite moment or the funniest part of the project?

As with any creative project, there are ups and downs: moments when you wish you had never started, and times when your confidence in the project is peaking. For me the most exciting part was creating those very first shots and realizing ‘Wait, this idea IS going to be possible!’. And although I was sometimes battling against an AI entity that didn’t want to make what I wanted, I loved it when it surprised me.

Because most of my time was invested in creating the rushes, the editing didn’t take long. And as such it felt exciting, seeing all those shots coming together and the story taking shape with just a few clicks and cuts.

What’s also interesting to note is that this was a pretty lonely process, just me behind the computer with regular feedback from Roeland. So once Chemistry Film was on board to grade the film and Antoine Cerbère joined to do the sound, it felt like a huge injection of energy. Getting their versions of the film back with graded footage and sound was fantastic, and the fact they were so enthusiastic about the film reignited the enthusiasm we had when starting this project. So a big shoutout to them!

Did you encounter any difficulties along the way? If so, how did you overcome them?

I think the main difficulty was keeping an open mind about the limitations of the technology at the time of creating the film. I spent many hours stubbornly trying to create the exact image I had in mind, only to scrap it in frustration after many different iterations. It was good to have the back-and-forth with Roeland, to whom I sent daily updates on the shots, as he was always strict in his feedback, keeping the bar high.

So, a lot of darlings were killed but in the end, I think it really benefited the film.

One real limitation was that Runway didn’t want to give me circular motions. We really wanted to add a shot of a spinning tire, but there was no way to do so, as Runway Gen 2’s Motion Brush controls didn’t give me that option. So it took a lot of time to find an alternative for that tire shot, which was crucial to the story, since up to 28% of microplastics, or even more, come from tires that wear out. And I had to scrap the whole idea of a turning washing machine filled with clothes (washing synthetic textiles being another major source of microplastics), since the AI tools we used didn’t want to give us that.

Noticing that many of your projects focus on social messages and are made on different budgets (Format/Flex), could you tell us which previous project you would have undertaken with the help of AI?

Whenever we can, we dedicate our time and energy to causes that need attention. We make multiple good-cause films each year, but we’re always trying to overcome budgetary challenges. As such, a lot of our own concepts are created with that in mind, finding ways to creatively use stock footage as part of the idea.

There’s one project that we would’ve made 100% in AI as well, if the technology had existed at the time and we had been familiar with the programs: a project called ‘The Rhythm Of Death’ for PETA. It’s a series of three social films, each focusing on a particular animal and showing pictures of them in rapid succession – the edit rhythm corresponding to the actual rhythm at which those animals are being slaughtered at this moment. An almost live representation of the animals being killed.

Those photos took a long time to source on free websites, and it took even longer to edit them, placing the animals’ heads in the same position in every photo so it was watchable. We could definitely have used AI both to generate those photos and to position the animals’ heads.

Another one is a film called ‘Everybody Influencer’, in which we show how one influencer taking a photo in a fragile ecosystem such as a poppy field can lure thousands and thousands of other people to the same spaces, ruining them through the overflow of visitors.

We searched the whole internet for actual people in poppy fields, and although we sourced them from free websites, we manually pixelated their faces out of respect for their identity. If AI had been accessible at that point, we could’ve just generated, let’s say, 50 different influencers, and the job would’ve been done in a tenth of the time.

Do you have any advice for students entering the field?

There are more opportunities than ever to create stories. Ignore how overwhelming it all is and dive into one aspect of filmmaking. I have a tendency to watch tutorial after tutorial, trying to follow a structured, linear path towards understanding a 3D program or an AI platform or whatever. But I would say start with the core of it all: finding the idea and the story, and then discovering the form it could take. It’ll be much more rewarding and interesting if you follow tutorials to craft an actual piece instead of making stuff that’s only a representation of your learning.

In short: jump and figure it out while you go.

Finally, what message would you give to the industry for 2024 regarding AI?

While we are figuring out how to protect the value of human input in these times of technological revolution, there are so many opportunities to make things more efficient, logical or budget-friendly, whether in the actual visualization of a story or behind the scenes during the production process. Use the technology for what it does well and trust in your own capabilities and in those of others. People will always add value to every aspect of filmmaking, but for us this opens up many more opportunities. We don’t fear what’s on the horizon; we’re excited about what it helps us become and create.
