Eight Interesting AI Papers published just before 2023 ends. Yesterday, I ventured out to scare off two cows that had jumped over the fence onto my plot. Despite my brisk arm movements and theatrical yells, the cows were unimpressed, and only left when they could be bothered. Later in the evening, ChatGPT told me something I hadn’t known: cows can massively outrun humans, clocking 30 miles per hour over short distances!

2023 hasn’t ended yet, and the pace of AI research is not abating by any means. Sometimes, I feel like AI research is charging ahead like a herd of mad cows. Here are 8 interesting papers that were published just a few weeks before the end of 2023.

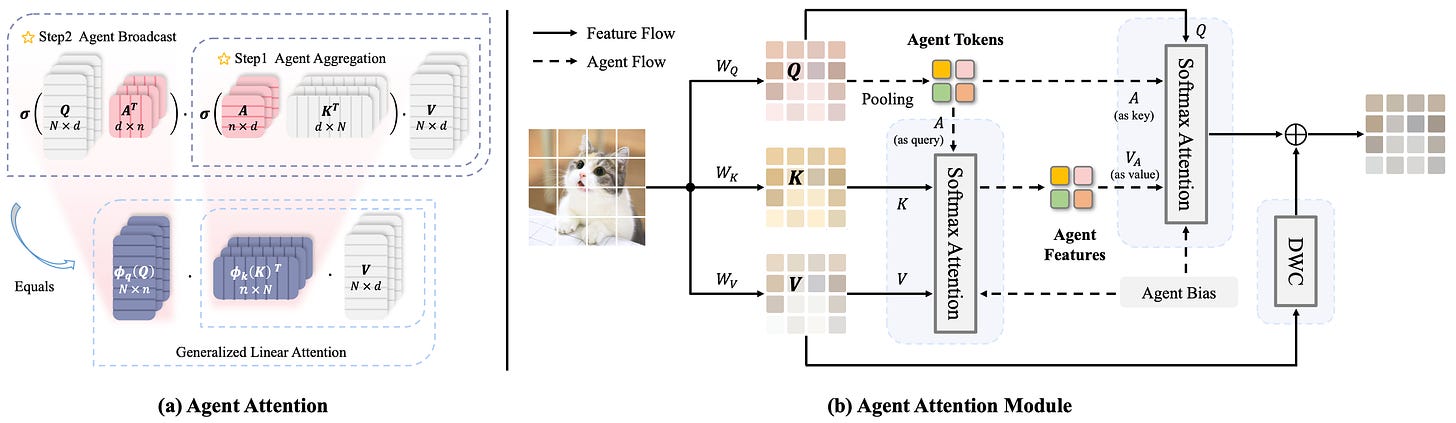

A new, effective agent attention paradigm. The attention mechanism is a key component of Transformers, and one of the backbones of GenAI. However, attention requires lots of expensive computation, which restricts its use in many scenarios. Researchers at Tsinghua University just introduced a novel attention paradigm, Agent Attention, that strikes a favorable balance between computational efficiency and representation power. Agent Attention seamlessly integrates the powerful Softmax attention and the highly efficient linear attention. Check out the paper and code: Agent Attention: On the Integration of Softmax and Linear Attention.
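To make the idea a bit more concrete, here is a minimal sketch of how I read the core trick: a small set of agent tokens first gathers information from the keys and values with Softmax attention, then broadcasts it back to the queries with a second Softmax attention. The cost then grows with (tokens × agents) rather than (tokens × tokens). This is not the authors' implementation. The function names, the average pooling used to build agent tokens, and the toy inputs are my own assumptions, and extras from the paper's actual code are left out. It is written in plain Python to stay dependency-free.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_scores(a, b, scale):
    """Compute scale * (a @ b^T)."""
    return [[s * scale for s in row] for row in matmul(a, transpose(b))]


def pool_agents(q, n_agents):
    """Build agent tokens by averaging consecutive groups of queries.

    Assumption: simple average pooling; leftover queries that do not fill
    a whole group are ignored when forming the agents.
    """
    if not 1 <= n_agents <= len(q):
        raise ValueError("n_agents must be between 1 and the number of queries")
    group = len(q) // n_agents
    agents = []
    for i in range(n_agents):
        chunk = q[i * group:(i + 1) * group]
        agents.append([sum(col) / len(chunk) for col in zip(*chunk)])
    return agents


def agent_attention(q, k, v, n_agents):
    """Hypothetical helper: two small Softmax attentions instead of one big one."""
    scale = 1.0 / math.sqrt(len(q[0]))
    agents = pool_agents(q, n_agents)
    # Step 1 (aggregation): agents act as queries over the keys/values.
    agent_values = matmul(softmax_rows(scaled_scores(agents, k, scale)), v)
    # Step 2 (broadcast): the original queries attend to the agents.
    return matmul(softmax_rows(scaled_scores(q, agents, scale)), agent_values)


# Toy example: 4 tokens, 2 agents, feature size 2.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
k = [[0.2, 0.1], [0.0, 0.3], [0.4, 0.4], [-0.1, 0.2]]
v = [[1.0, 2.0]] * 4
out = agent_attention(q, k, v, n_agents=2)
print(len(out), len(out[0]))  # 4 2
```

With far fewer agents than tokens, neither score matrix is ever tokens × tokens, which is where the savings would come from.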

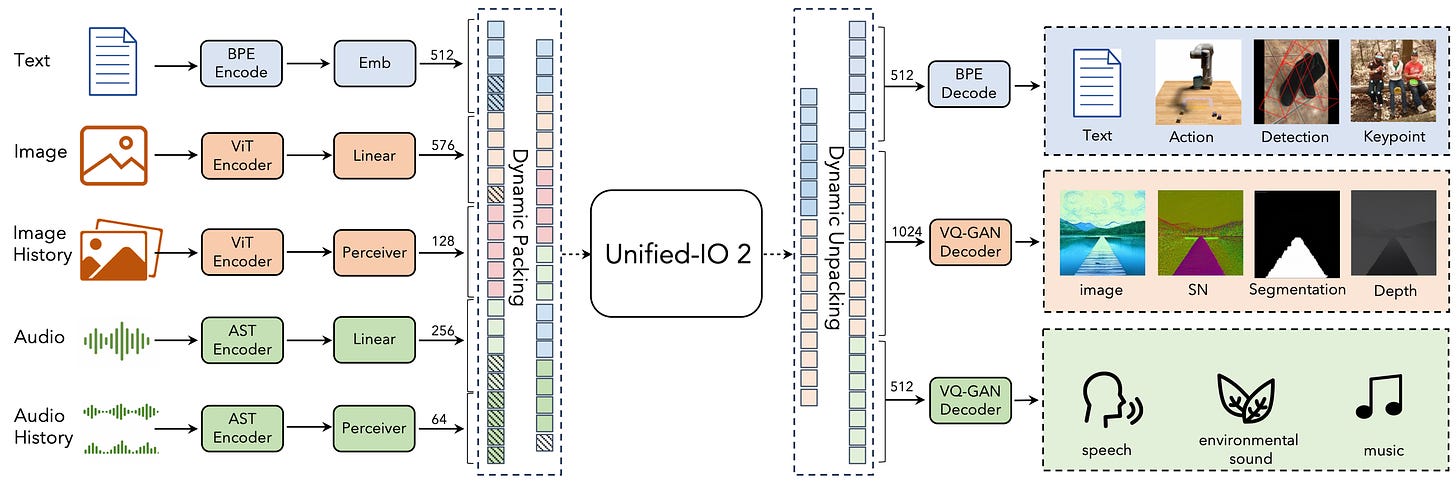

The first open-source, fully multimodal unified model. Researchers at AllenAI just presented Unified-IO 2, the first autoregressive multimodal model that is capable of understanding and generating image, text, audio, and action. Unified-IO 2 achieves SOTA performance on the GRIT benchmark, and strong results across benchmarks covering image generation and understanding, natural language understanding, video and audio understanding, and robotic manipulation. Check out the paper, code, and examples here: Unified-IO 2: Scaling Autoregressive Multimodal Models with Vision, Language, Audio, and Action.

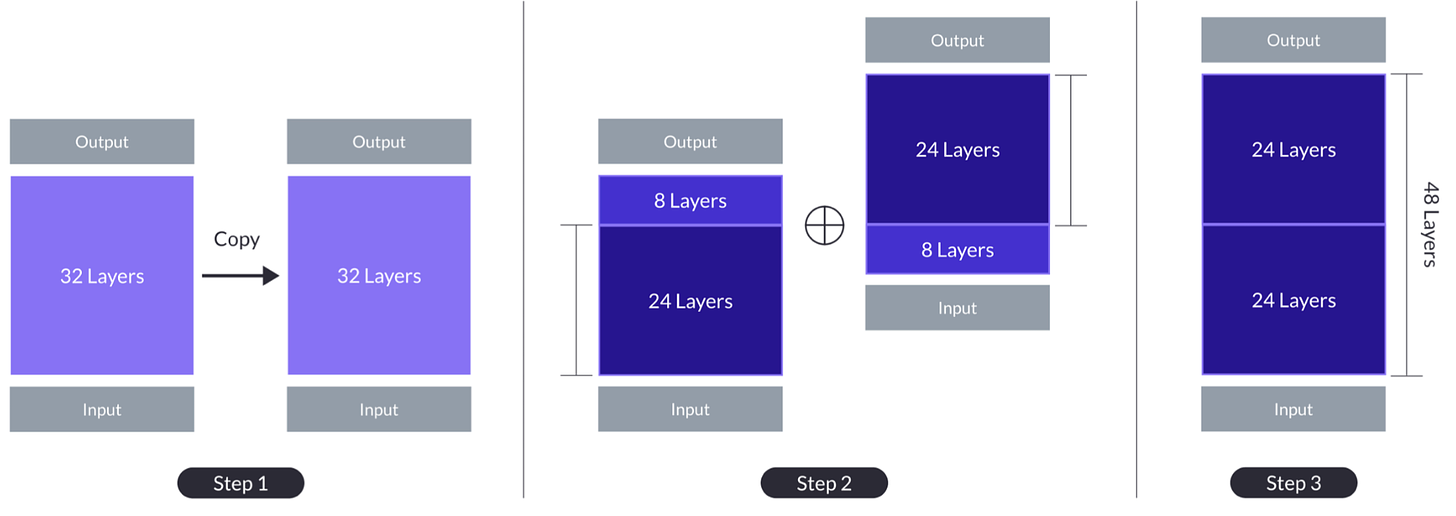

New, SOTA efficient alternative to Mixture-of-Experts model scaling. Mixture-of-Experts (MoE) models are the latest trend in LLMs. But MoE models require lots of complex changes to training and inference, plus extra computation. Researchers at Upstage AI just introduced depth up-scaling (DUS), a novel technique to up-scale base LLMs efficiently and effectively in a simple manner. The resulting model, SOLAR 10.7B, outperforms existing open-source pretrained LLMs, such as Llama 2 and Mistral 7B. And the instruction fine-tuned SOLAR 10.7B-Instruct surpasses Mixtral-8x7B-Instruct. Paper: SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling.
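Here is a tiny sketch of the layer bookkeeping behind depth up-scaling, as I understand it from the paper: take a base model with n layers, make two copies, drop the last m layers from one copy and the first m layers from the other, then stack what is left (and continue pretraining the result). The function name and the string placeholders for layers are hypothetical, and the numbers n = 32 and m = 8 are my reading of the paper's setup, so treat them as assumptions.

```python
def depth_up_scale(layers, m):
    """Return the up-scaled stack: layers [0, n-m) followed by layers [m, n).

    Assumption: `layers` is any ordered list of layer objects. In a real
    model the second half would be deep copies, so that the two halves
    hold independent weights during continued pretraining.
    """
    n = len(layers)
    if not 0 <= m < n:
        raise ValueError("m must satisfy 0 <= m < number of layers")
    front = layers[: n - m]  # first copy, with its last m layers removed
    back = layers[m:]        # second copy, with its first m layers removed
    return front + back


# Placeholder layers for a 32-layer base model (illustrative only).
base = [f"layer_{i}" for i in range(32)]
scaled = depth_up_scale(base, m=8)
print(len(scaled))  # 48
```

The appeal is that the result is still a plain dense Transformer stack, so no routing or expert-specific training and inference machinery is needed.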

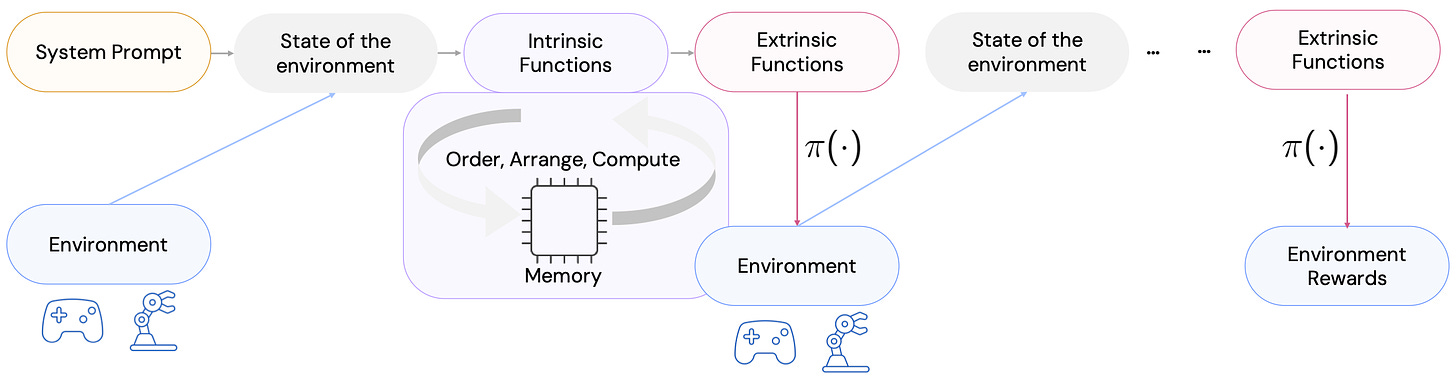

New approach to embedding structured reasoning in AI Agents. This paper presents a new general framework for integrating and learning structured reasoning into AI agents’ policies. It provides the adaptive ability to learn models inside every module or function, consistent with the modular structure of cognitive processes. The paper shows that AI agents perform and adapt far better when organised reasoning and prior knowledge are embedded. Paper: Pangu-Agent: A Fine-Tunable Generalist Agent with Structured Reasoning.

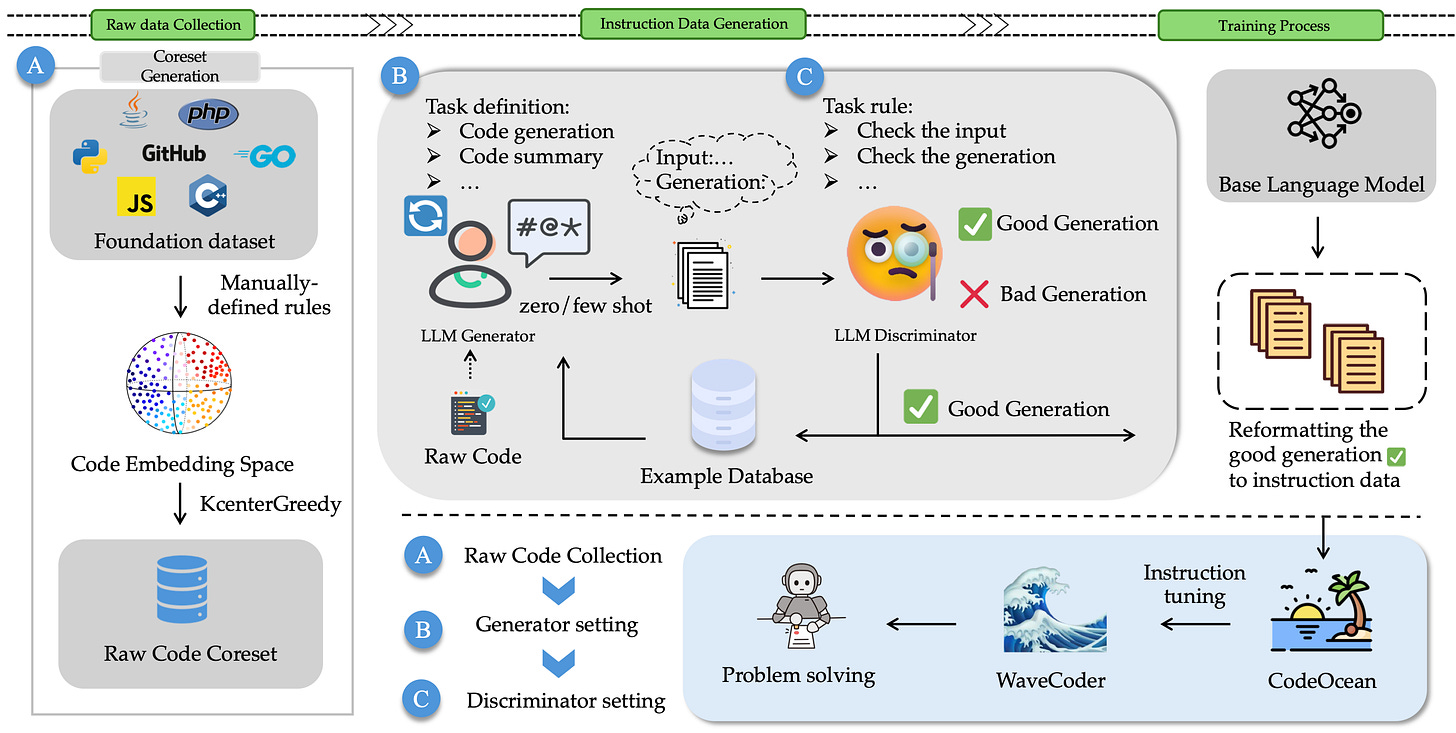

New, powerful, instruction fine-tuned coding model and dataset. Existing methods for generating instruction data for coding models often produce duplicate data and offer too little control over data quality. Microsoft Research just introduced WaveCoder, a fine-tuned Code LLM that is specifically designed to enhance instruction tuning of Code LLMs. According to the paper, WaveCoder models outperform other open-source models. Paper: WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation.

Three new, awesome AI surveys, and 2024. OK, so it’s a wrap for AI research in 2023. Here are 3 awesome surveys you’ll enjoy:

OK, so New Year’s Eve is coming and my partner is admonishing me to shut off the bloody laptop right now. Wishing you a wealthy, healthy and happy 2024. Peace. Love. Friendship. Be nice to your neighbours.

- Google Gemini vs. GPT-4V: Comparing Vision-Language Capabilities
- AnyText: Multilingual Visual Text Gen & Editing (paper, demo, code)

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.