There are good reasons why machine learning has become so popular, and many businesses are building products to take advantage of it.

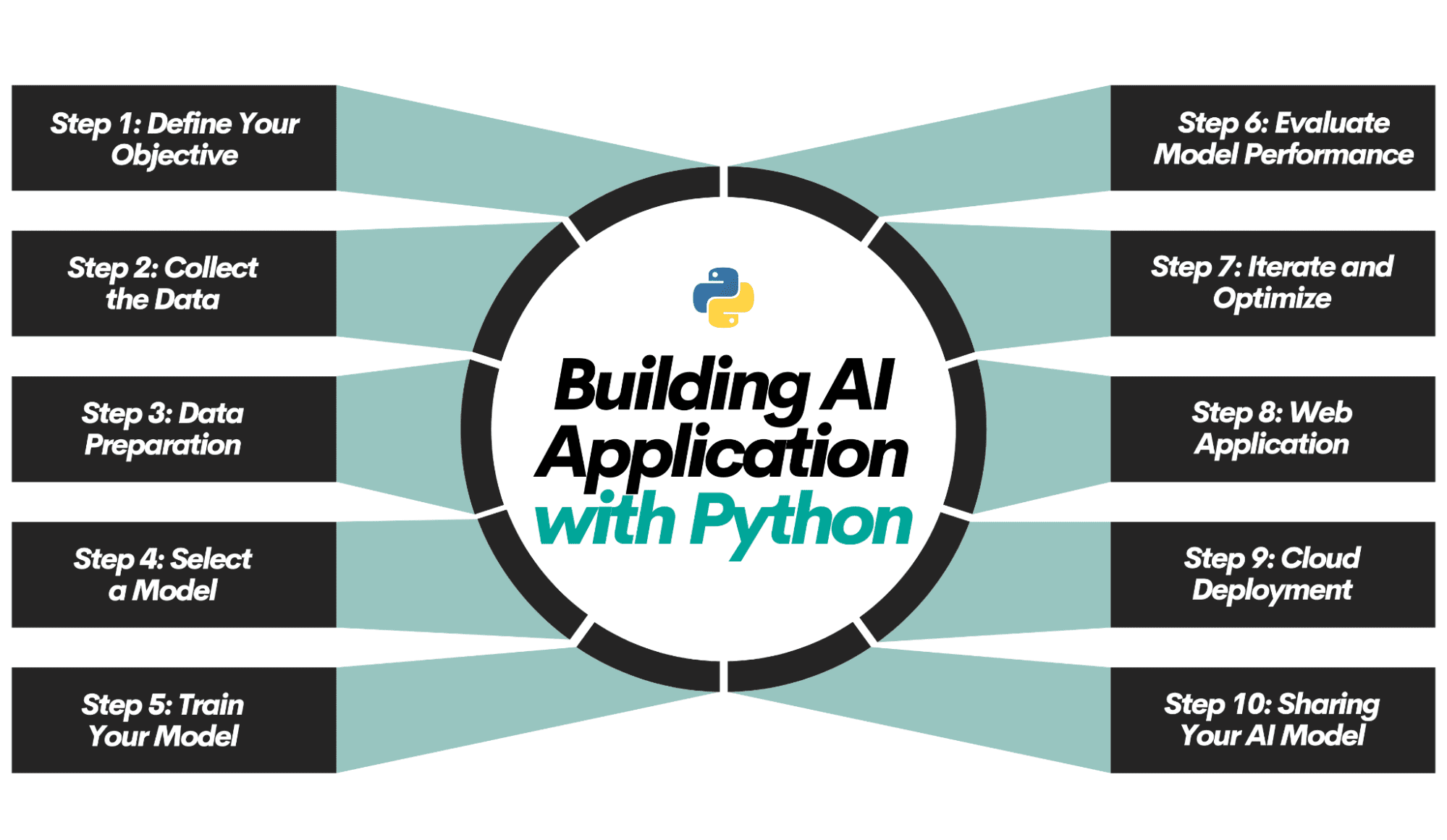

You must follow some steps to distinguish your application from the average one and choose the best options for your project.

This article will explore fundamental steps and options for creating a successful AI Application with Python and other tools.

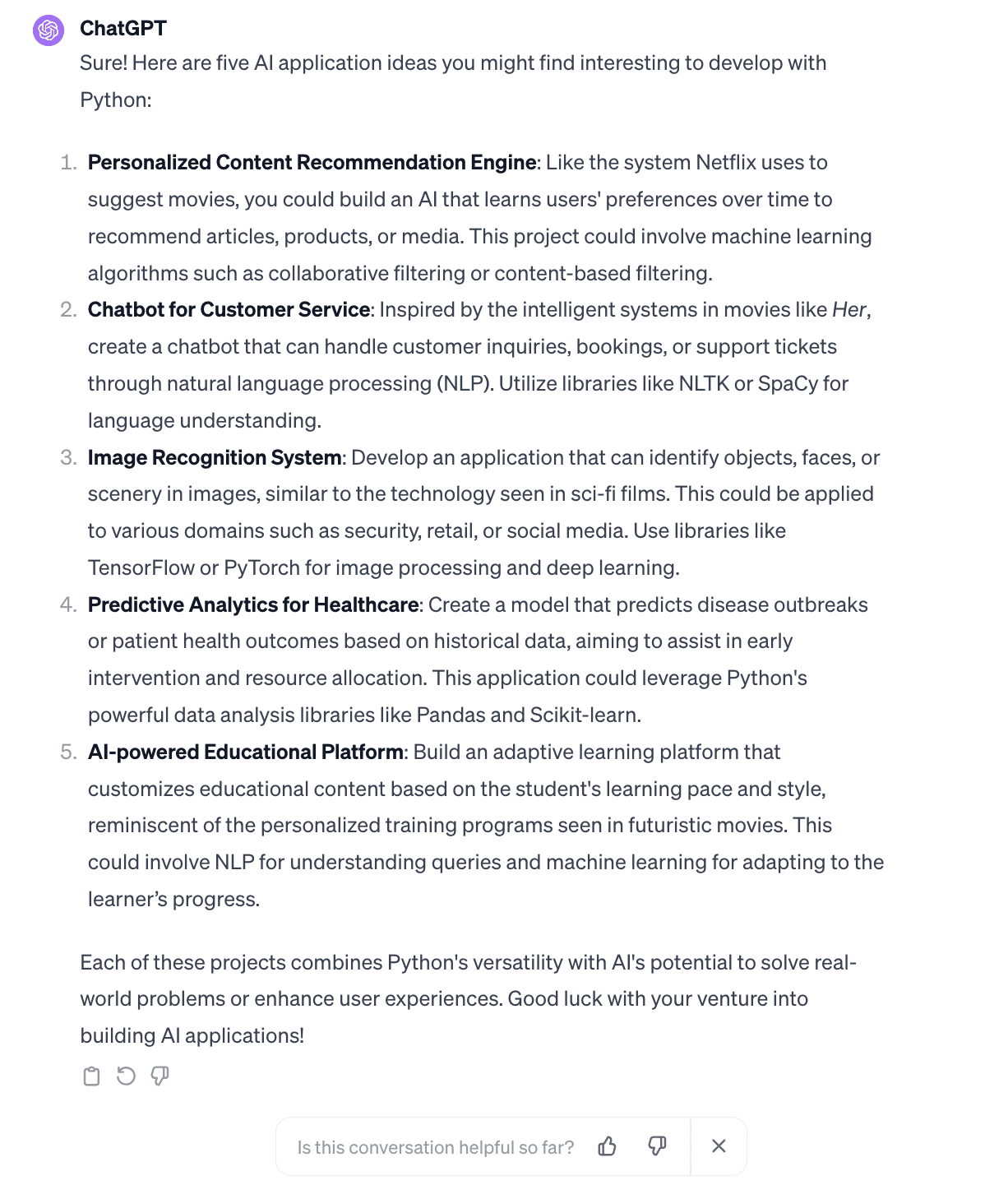

Start by defining the problem you aim to solve with your AI model. This could range from predicting customer behavior to automating a routine task. If you have trouble finding an idea, use ChatGPT or Bard with the following prompt.

Generate 5 ideas about AI Applications that I'll build with Python.

Now, let’s look at ChatGPT’s answer.

Now we have options. The next step is collecting your data. This step includes sourcing datasets from different repositories or gathering them through APIs or web scraping. If you prefer datasets that are already clean and processed, you can use the following resources:

- GitHub repositories: A platform where millions of developers collaborate on projects, including many that share datasets.

- Kaggle Dataset: A machine learning and data science website that hosts datasets, competitions, and learning resources.

- UCI Machine Learning Repository: A collection of datasets for machine learning research, maintained by UC Irvine.

- Google Dataset Search: A search engine for datasets that lets you search by keyword or location.

- AWS Open Data: This program provides access to open data on AWS.
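As a minimal sketch of what a ready-to-use dataset looks like once loaded, the snippet below reads the Iris dataset, a classic UCI dataset that ships with scikit-learn, into a pandas DataFrame. The choice of Iris is only an assumption for illustration; any of the sources above would work in a similar way.

```python
from sklearn.datasets import load_iris

# Iris is bundled with scikit-learn, so no download is needed.
iris = load_iris(as_frame=True)
df = iris.frame  # four feature columns plus a "target" column

print(df.shape)                     # (rows, columns)
print(df.head())                    # first few records
print(df["target"].value_counts())  # how many samples per class

# For a CSV downloaded from Kaggle or GitHub, pandas.read_csv("your_file.csv")
# gives a similar DataFrame ("your_file.csv" is a placeholder name).
```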

Now you have your goal, and your data is ready. The next step is to prepare your data for the model you want to apply, whether that is a machine learning or a deep learning model. Either way, your data needs to be:

- Clean: This step will be more complicated if you collected data through web scraping or an API. You should remove duplicates and irrelevant entries, correct data types, and handle missing values using methods like imputation or deletion. https://www.stratascratch.com/blog/data-cleaning-101-avoid-these-5-traps-in-your-data/

- Formatted Properly: To apply your model, features should be consistent and appropriate. Categorical data needs to be encoded before most machine learning models can use it. Numerical features should be scaled or normalized, which helps many models perform better.

- Balanced: Your dataset should be balanced, meaning it should not favor one class over the others. Otherwise, your predictions may be biased toward the majority class.

- Feature Engineered: Sometimes, you should adjust your features to increase your model’s performance. You might remove some features that ruin your model’s performance or combine them to improve it. https://www.linkedin.com/posts/stratascratch_feature-selection-for-machine-learning-in-activity-7082376269958418432-iZWb

- Split: If you’re new to Machine Learning and your model performs exceptionally well, be cautious. In machine learning, some models can be too good to be true, indicating an overfitting issue. To address this, one approach is to split your data into training, testing, and sometimes even validation sets.

https://platform.stratascratch.com/technical/2246-overfitting-problem
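The preparation steps above can be sketched with pandas and scikit-learn. This is one possible arrangement, not the only one: the toy DataFrame, its column names (`age`, `plan`, `churned`), and the choice of median imputation are all assumptions made up for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data; row 4 duplicates row 0 and one age is missing.
raw = pd.DataFrame({
    "age": [25, 32, np.nan, 41, 25, 58, 36, 47, 29],
    "plan": ["basic", "pro", "basic", "pro", "basic", "basic", "pro", "basic", "pro"],
    "churned": [0, 1, 0, 1, 0, 0, 1, 0, 1],
})

# Clean: drop exact duplicate rows.
clean = raw.drop_duplicates().reset_index(drop=True)
X = clean[["age", "plan"]]
y = clean["churned"]

# Balanced: inspect the share of each class before modeling.
print(y.value_counts(normalize=True))

# Split: hold out a test set; stratify keeps the class ratio in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Formatted properly: impute and scale numbers, one-hot encode categories.
preprocess = ColumnTransformer([
    ("num", Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ]), ["age"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
])

# Fit on the training data only, then reuse the same statistics on the test data.
X_train_prepared = preprocess.fit_transform(X_train)
X_test_prepared = preprocess.transform(X_test)
print(X_train_prepared.shape, X_test_prepared.shape)
```

Splitting before fitting the preprocessing steps keeps information from the test set out of the imputation and scaling statistics, which is one common way to avoid overly optimistic results.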

Okay, at this step, everything is ready to go. Now, which model will you apply? Can you guess which one is best? Of course, you should have an initial candidate in mind, but you should also test different models.

You can choose a model from the following Python libraries:

- scikit-learn: It is ideal for beginners. You can implement machine learning models with minimal code. Here is the official documentation: https://scikit-learn.org/stable/

- TensorFlow: TensorFlow can be great for scalability and deep learning. It allows you to develop complex models. Here is the official documentation: https://www.tensorflow.org/

- Keras: It runs on top of TensorFlow, making deep learning more straightforward. Here is the official documentation: https://keras.io/

- PyTorch: It is generally preferred for research and development because it is easy to change models on the fly. Here is the official documentation: https://pytorch.org/
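Testing different models can be as simple as running each candidate through the same cross-validation. The sketch below uses scikit-learn; the three candidates and the bundled breast cancer dataset are arbitrary choices for illustration, not a recommendation.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Candidate models; scaling is bundled in where the algorithm is scale-sensitive.
candidates = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "k_nearest_neighbors": make_pipeline(StandardScaler(), KNeighborsClassifier()),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Score every candidate with the same 5-fold cross-validation.
scores = {}
for name, model in candidates.items():
    fold_scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    scores[name] = fold_scores.mean()
    print(f"{name}: {scores[name]:.3f}")

best = max(scores, key=scores.get)
print("best candidate:", best)
```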

Now, it is time to train your model. Training involves feeding the data into the model, which allows it to learn from the patterns in the data and adjust its parameters accordingly. This step is straightforward.
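To make "adjusting parameters" concrete, here is a hand-written sketch of gradient descent fitting a straight line with NumPy. In practice, a library call such as `model.fit(X, y)` hides this loop; the synthetic data, learning rate, and number of epochs below are assumptions chosen for illustration.

```python
import numpy as np

# Synthetic data: y is roughly 3 * x + 0.5 plus a little noise.
rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=200)
y = 3.0 * x + 0.5 + rng.normal(0, 0.1, size=200)

# Model parameters start at zero and are adjusted step by step.
w, b = 0.0, 0.0
learning_rate = 0.1

for epoch in range(500):
    error = (w * x + b) - y          # prediction error on the training data
    grad_w = 2 * np.mean(error * x)  # gradient of mean squared error w.r.t. w
    grad_b = 2 * np.mean(error)      # gradient of mean squared error w.r.t. b
    w -= learning_rate * grad_w      # move parameters against the gradient
    b -= learning_rate * grad_b

print(f"learned w={w:.2f}, b={b:.2f}")  # should land close to 3.0 and 0.5
```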

You have trained your model, but how can you determine if it is good or bad? Of course, there are various methods for assessing different models. Let’s explore a range of model evaluation metrics.

- Regression: MAE measures the average magnitude of errors between predicted and actual values without considering their direction. The R² score can also be used.

- Classification: Precision, recall, and F1 scores evaluate the classification model’s performance.

- Clustering: Evaluation metrics here are less straightforward because we usually have no true labels to compare with. However, metrics like the Silhouette Score, Davies-Bouldin Index, and Calinski-Harabasz Index are commonly used.
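The metrics above are all available in `sklearn.metrics`. The sketch below computes them on small made-up predictions and on synthetic clusters; the numbers are illustrative only and do not come from a real model.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import (
    calinski_harabasz_score,
    davies_bouldin_score,
    f1_score,
    mean_absolute_error,
    precision_score,
    r2_score,
    recall_score,
    silhouette_score,
)

# Regression: made-up true and predicted values.
y_true_reg = [3.0, 5.0, 2.0, 7.0]
y_pred_reg = [2.5, 5.0, 3.0, 8.0]
mae = mean_absolute_error(y_true_reg, y_pred_reg)  # 0.625
r2 = r2_score(y_true_reg, y_pred_reg)              # about 0.847
print(f"MAE={mae:.3f}, R2={r2:.3f}")

# Classification: made-up labels with 3 true positives, 1 false positive, 1 false negative.
y_true_clf = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred_clf = [1, 0, 0, 1, 0, 1, 1, 0]
precision = precision_score(y_true_clf, y_pred_clf)  # 0.75
recall = recall_score(y_true_clf, y_pred_clf)        # 0.75
f1 = f1_score(y_true_clf, y_pred_clf)                # 0.75
print(f"precision={precision:.2f}, recall={recall:.2f}, F1={f1:.2f}")

# Clustering: no true labels are used, only the data and the assigned clusters.
X_blobs, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=0)
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X_blobs)
sil = silhouette_score(X_blobs, labels)        # higher is better, range -1 to 1
dbi = davies_bouldin_score(X_blobs, labels)    # lower is better
chi = calinski_harabasz_score(X_blobs, labels) # higher is better
print(f"silhouette={sil:.3f}, davies_bouldin={dbi:.3f}, calinski_harabasz={chi:.1f}")
```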

Based on the results from Step 6, there are several actions you can take to improve the performance of your model. Let’s see.

- Tweaking Hyperparameters: Adjusting the hyperparameters of your model can significantly change its performance. Hyperparameters control the learning process and the structure of the model.

- Selecting Different Algorithms: Sometimes, there might be better options than your initial model. That’s why exploring different algorithms might be a good idea, even if you are already midway through the process.

- Adding More Data: More data often leads to a better model. Therefore, adding more data would be a wise choice if you need to improve model performance and have a budget for data collection.

- Feature Engineering: Sometimes, the solution to your problem might be out there, waiting for you to discover it. Feature engineering could be the most cost-effective solution.
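Hyperparameter tweaking, for example, can be automated with a grid search. The sketch below uses scikit-learn's `GridSearchCV` on the bundled Iris dataset; the model (`SVC`) and the grid values are assumptions picked for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Scaling and the classifier live in one pipeline so both are refit per fold.
pipeline = Pipeline([("scale", StandardScaler()), ("svc", SVC())])

# Hyperparameter values to try; "svc__" targets the pipeline step named "svc".
param_grid = {"svc__C": [0.1, 1, 10], "svc__kernel": ["linear", "rbf"]}

search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

print("best hyperparameters:", search.best_params_)
print("cross-validated accuracy:", round(search.best_score_, 3))
print("held-out test accuracy:", round(search.score(X_test, y_test), 3))
```

The test set is touched only once, after the search, so the final number is not influenced by the tuning itself.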

Your model is ready, but it needs an interface. Right now it lives in a Jupyter Notebook or PyCharm, but it needs a user-friendly front end. To do that, you need to develop a web application, and here are your options.

- Django: It is full-featured and scalable but less beginner-friendly.

- Flask: Flask is a beginner-friendly micro web framework.

- FastAPI: It is a modern and fast way to build Web applications.
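As a rough sketch of how a model can sit behind a web endpoint, here is a minimal Flask app. The `/predict` route, the JSON shape, and the placeholder `predict` function are all hypothetical; in a real application the placeholder would call your trained model instead.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def predict(features):
    """Hypothetical stand-in for a trained model: returns the mean of the inputs."""
    return sum(features) / len(features)


@app.route("/predict", methods=["POST"])
def predict_endpoint():
    # Expect a JSON body such as {"features": [1.0, 2.0, 3.0]}.
    payload = request.get_json(silent=True)
    features = payload.get("features") if isinstance(payload, dict) else None
    is_valid = (
        isinstance(features, list)
        and len(features) > 0
        and all(isinstance(value, (int, float)) for value in features)
    )
    if not is_valid:
        return jsonify(error="send JSON with a non-empty numeric 'features' list"), 400
    return jsonify(prediction=predict(features))
```

Saved as `app.py`, this can be started locally with `flask --app app run` and called by sending a POST request with a JSON body to `/predict`.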

Your model could be the best one ever developed. However, you can’t be sure if it stays on your local drive. Sharing your model with the world and going live will be good choices for getting feedback, seeing real impacts, and growing it more efficiently.

To do that, here are your options.

- AWS: AWS supports applications at a larger scale, with multiple options for each need. For example, it offers several database services that you can choose from and scale.

- Heroku: Heroku is a platform as a service that allows developers to build, run, and operate applications entirely in the cloud.

- PythonAnywhere (pythonanywhere.com): PythonAnywhere is a cloud service for Python-specific applications. It is excellent for beginners.

There are many ways of sharing your AI model with the world. Let’s discuss some of the most popular and easiest ones, especially if you like writing.

- Content Marketing: Content marketing involves creating valuable content, such as blog posts or videos, to showcase your AI model’s capabilities and attract potential users.

- Community Engagement: Online communities like Reddit allow you to share insights about your AI model, build credibility, and connect with potential users.

- Partnership and Collaboration: Partnering with other professionals in the field can help expand the reach of your AI model and access new markets. If you write about your app on Medium, try collaborating with writers in the same niche.

- Paid Advertising and Promotion: Paid advertising channels, like Google Ads or other social media ads, can help increase visibility and attract users to your AI model.

After finishing all ten steps listed above, it is time to stay consistent and maintain the application you have developed.

In this article, we went through the ten ultimate steps to build & deploy AI Applications with Python.

Nate Rosidi is a data scientist and in product strategy. He’s also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Nate writes on the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.
