Researchers published a study comparing the accuracy and quality of the summaries that LLMs produce. Claude 3 Opus performed particularly well, but humans still have the edge.

AI models are extremely useful for summarizing long documents when you don’t have the time or inclination to read them.

Growing context windows let us prompt models with ever-longer documents, which tests their ability to keep the facts straight in their summaries.

Researchers from the University of Massachusetts Amherst, Adobe, the Allen Institute for AI, and Princeton University published a study that set out to measure how good AI models are at summarizing book-length content (more than 100k tokens).

FABLES

They selected 26 books published in 2023 and 2024 and had various LLMs summarize them. The recent publication dates were chosen to reduce the risk that the books had appeared in the models' training data.

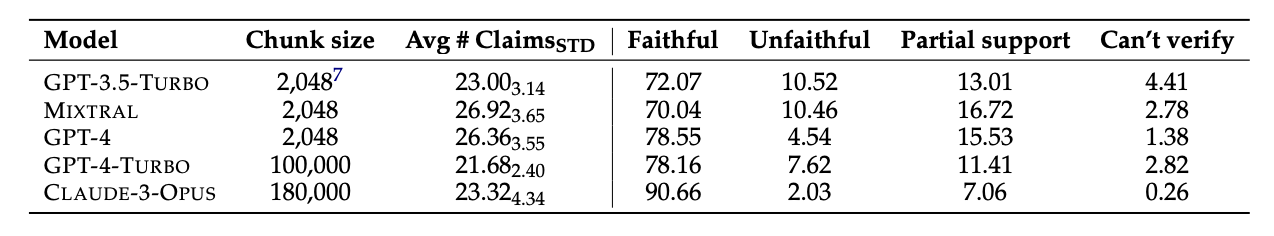

Once the models had produced their summaries, the researchers used GPT-4 to extract decontextualized claims from them. They then hired human annotators who had read the books and asked them to fact-check those claims.

The resulting data was compiled into a dataset called “Faithfulness Annotations for Book-Length Summarization” (FABLES). FABLES contains 3,158 claim-level annotations of faithfulness across 26 narrative texts.

The test results showed that Claude 3 Opus was “the most faithful book-length summarizer by a significant margin,” with over 90% of its claims verified as faithful, or accurate.
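A percentage like this is a claim-level score: the share of a model's extracted claims that annotators marked as faithful. The minimal Python sketch below shows that calculation. It assumes a simple faithful/unfaithful label per claim, and the model names and labels are hypothetical toy data, not values from FABLES.

```python
from collections import defaultdict

# Hypothetical claim-level annotations as (model, is_faithful) pairs.
# Toy values for illustration only; these are NOT FABLES data.
annotations = [
    ("model_a", True), ("model_a", True), ("model_a", False), ("model_a", True),
    ("model_b", True), ("model_b", False), ("model_b", False), ("model_b", True),
]

def faithfulness_rates(rows):
    """Return, per model, the percentage of its claims annotated as faithful."""
    totals = defaultdict(int)
    faithful = defaultdict(int)
    for model, is_faithful in rows:
        totals[model] += 1
        if is_faithful:
            faithful[model] += 1
    return {m: 100.0 * faithful[m] / totals[m] for m in totals}

rates = faithfulness_rates(annotations)
# Rank models from most to least faithful.
for model, pct in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model}: {pct:.1f}% of claims faithful")
```

Because the score is computed per claim, a model that makes many claims contributes more annotations, but its rate is still a simple ratio of faithful claims to all claims.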

GPT-4 came a distant second with only 78% of its claims verified as faithful by the human annotators.

The hard part

All the models tested struggled with the same things. Most of the claims they got wrong concerned events, or the states of characters and their relationships.

The paper noted that "most of these claims can only be invalidated via multi-hop reasoning over the evidence, highlighting the task's complexity and its difference from existing fact-verification settings."

The LLMs also frequently omitted critical information from their summaries, and they over-emphasized content toward the end of books while missing important content nearer the beginning.

Will AI replace human annotators?

Human annotators or fact-checkers are expensive. The researchers spent $5,200 to have the human annotators verify the claims in the AI summaries.

Could an AI model have done the job for less? Simple fact retrieval is something Claude 3 is good at, but its performance when verifying claims that require a deeper understanding of the content is less consistent.

When presented with the extracted claims and prompted to verify them, all the AI models fell short of human annotators. They performed particularly badly at identifying unfaithful claims.
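One way to see why missing unfaithful claims matters is to compare an automatic rater's labels against the human labels and look at recall on the unfaithful class, not just overall agreement. The Python sketch below is a hypothetical illustration under that assumption; the labels are invented toy data and the metric choice is ours, not necessarily the paper's exact evaluation.

```python
# Hypothetical labels for the same six claims: True = faithful, False = unfaithful.
# Toy data for illustration only; not taken from the study.
human = [True, True, False, True, False, False]
auto = [True, True, True, True, False, True]

def accuracy(human_labels, rater_labels):
    """Fraction of claims where the rater agrees with the human label."""
    matches = sum(h == r for h, r in zip(human_labels, rater_labels))
    return matches / len(human_labels)

def unfaithful_recall(human_labels, rater_labels):
    """Share of human-flagged unfaithful claims that the rater also flagged."""
    rater_on_unfaithful = [r for h, r in zip(human_labels, rater_labels) if not h]
    if not rater_on_unfaithful:
        return float("nan")  # no unfaithful claims to catch
    caught = sum(1 for r in rater_on_unfaithful if not r)
    return caught / len(rater_on_unfaithful)

print(f"agreement: {accuracy(human, auto):.2f}")
print(f"unfaithful recall: {unfaithful_recall(human, auto):.2f}")
```

In this toy case the rater agrees with humans on four of six claims but catches only one of the three unfaithful ones. Since most claims in a good summary are faithful, a rater that leans toward "faithful" can look reasonable on agreement while still missing the errors that matter.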

Even though Claude 3 Opus was the best claim verifier by some distance, the researchers concluded it “ultimately performs too poorly to be a reliable auto-rater.”

When it comes to understanding nuance, complex human relationships, plot points, and character motivations in a long narrative, humans still seem to have the edge, for now.

Discover more from reviewer4you.com
