When designers at the toy company Mattel were asked recently to come up with a new Hot Wheels model car, they sought inspiration from DALL∙E 2, an AI system developed by OpenAI that creates custom images and art based on what people describe in plainspoken language.

Using the tool, designers can type a prompt such as “A scale model of a classic car,” and DALL∙E 2 will generate an image of a toy vintage car, perhaps silver in color and with whitewall tires.

As a next step, the designer could erase the top of the car and then type, “Make it a convertible” and DALL∙E 2 will update the image of the car as a convertible. The designer can keep tweaking the design, asking DALL∙E 2 to try it in pink or blue, with the soft-top on, and on and on.

DALL∙E 2 is coming to Microsoft’s Azure OpenAI Service, by invitation, allowing select Azure AI customers to generate custom images using text or images, the company announced today at Microsoft Ignite, a conference for developers and IT professionals.

The availability of DALL∙E 2 through Azure OpenAI Service provides customers such as Mattel with cloud AI infrastructure that combines cutting-edge text-to-image generation with the compliance, responsible AI guardrails and certifications that Azure offers, Microsoft says.

The Mattel designers were able to generate dozens of images, each iteration sparking and refining ideas that could help design a final fleshed-out rendering of a new Hot Wheels model car.

“It’s about going, ‘Oh, I didn’t think about that!’” said Carrie Buse, director of product design at Mattel Future Lab in El Segundo, California. She sees the AI technology as a tool to help designers generate more ideas. “Ultimately, quality is the most important thing,” she noted. “But sometimes quantity can help you find the quality.”

Microsoft is also integrating DALL∙E 2 into its consumer apps and services starting with the newly announced Microsoft Designer app, and it will soon be integrated into Image Creator in Microsoft Bing.

The rollout of DALL∙E 2 across Microsoft products and services reflects how the company’s investment in AI research is infusing AI into everything it builds, produces and delivers to help everyone boost productivity and innovation.


The trend is the result of nonlinear breakthroughs in AI capabilities achieved by bringing more compute to more data to train richer and more powerful models, according to Eric Boyd, Microsoft corporate vice president for AI Platform.

“The power of the models has crossed this threshold of quality and now they’re useful in more applications,” he said. “The other trend that we’re seeing is all the product developers are thinking through and understanding the ways that they can use AI in their products for both ease of use as well as saying, ‘Oh, I can make my product work better if I use AI.’”

DALL∙E 2 was trained on a supercomputer hosted in Azure that Microsoft built exclusively for OpenAI. The same Azure supercomputer was also used to train OpenAI’s GPT-3 natural language models and Codex, the model that powers GitHub Copilot and certain features in Microsoft Power Apps that run on Azure OpenAI Service. Azure also makes it possible for these AI tools to rapidly generate image, text or code suggestions for a person to review and consider using.

The addition of DALL∙E 2 builds on Microsoft and OpenAI’s ongoing partnership and expands the range of use cases for Azure OpenAI Service. The service, currently in preview, is the newest member of the Azure Cognitive Services family and offers the security, reliability, compliance, data privacy and other enterprise-grade capabilities built into Microsoft Azure.

Other AI technologies developed by Microsoft and available through Azure Cognitive Services such as language translation, speech transcription, optical character recognition and document summarization are showing up in products and services such as Microsoft Teams, Microsoft Power Platform and Microsoft 365.

“Over the last 18 months, we’ve seen this transition in technology from proving that you can do things with AI to mapping it to actual scenarios and processes where it’s useful to the end user,” said Charles Lamanna, Microsoft corporate vice president of business applications and platform. “It’s the productization of these very large language models.”

‘Whenever I get an email from my boss, send a text message to my phone’

These AI capabilities are aimed at eliminating tedious work and enabling employees to focus on higher-value tasks, such as freeing sales associates to engage in conversations with customers without having to take notes, Lamanna said. These new tools can also automate processes that currently eat up hours of people’s workdays such as writing summaries of sales calls and adding them to a client database.

“We can now inject AI that listens to our conversation and helps people be more productive by creating transcripts, capturing action items, doing summarization of the meeting, identifying common phrases or doing analysis about, ‘Am I a good listener?’” said Lamanna. “That required the advancement of the state-of-the-art AI and the advancement of these digital collaboration tools.”

Lamanna is focused on creating tools that enable anyone with a computing device to create their own AI-powered applications using the Microsoft Power Platform. For example, his team is rolling out a feature in Power Automate with AI-powered copilot capabilities that allow people to use natural language to build workflow processes that connect various services running in the Microsoft cloud.

“Users in normal language can say, ‘Hey, whenever I get an email from my boss, send a text message to my phone and put a to-do in my Outlook,’” Lamanna explained. “They can just say that, and it gets generated automatically.”

This ability to turn a sentence into a workflow dramatically expands the number of people who can create AI-powered software solutions, he said. People with a touch more technical know-how can further customize and refine their applications with low-code tools and graphical interfaces available in the Power Platform such as the intelligent document processing technology in AI Builder, he added.

A lawyer could use this technology to build a customized application that is triggered whenever a new contract is uploaded to the firm’s SharePoint site. This app could extract key information such as who wrote the contract, the parties involved and the industry sector, and then email a summary of the contract with these details to the firm’s lawyers who cover that sector or the clients involved.

“That’s kind of magic,” said Lamanna, contrasting this type of AI automated workflow to how such tasks are typically accomplished today. “You check the SharePoint site, open a new file, and skim and try to summarize it to see if you have to do anything with it. AI is getting people out of this monotony and getting computers to do what’s best for them to do anyway.”
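The contract workflow described above ends in a routing step: once the key details have been pulled out of a new contract, decide who should receive the summary. The Python sketch below illustrates only that step, under the assumption that extraction has already happened (in the article, that is the job of tools such as AI Builder). The `ContractSummary` type, the `route_summary` function, the coverage tables and the example names and addresses are all hypothetical, invented for illustration, and are not part of any Microsoft API.

```python
from dataclasses import dataclass


@dataclass
class ContractSummary:
    """Details the app could extract from a new contract (hypothetical type)."""
    author: str
    parties: list[str]
    sector: str


# Hypothetical coverage tables: which lawyers follow which sectors and clients.
SECTOR_COVERAGE = {
    "energy": ["alice@example.com"],
    "healthcare": ["bob@example.com", "carol@example.com"],
}
CLIENT_COVERAGE = {
    "Contoso": ["dave@example.com"],
}


def route_summary(summary: ContractSummary) -> tuple[list[str], str]:
    """Return (recipients, email body) for a newly uploaded contract."""
    # Start with the lawyers who cover the contract's sector.
    recipients = list(SECTOR_COVERAGE.get(summary.sector, []))
    # Add lawyers who cover any of the parties, skipping duplicates.
    for party in summary.parties:
        for address in CLIENT_COVERAGE.get(party, []):
            if address not in recipients:
                recipients.append(address)
    body = (
        f"New contract written by {summary.author}\n"
        f"Parties: {', '.join(summary.parties)}\n"
        f"Sector: {summary.sector}"
    )
    return recipients, body


recipients, body = route_summary(
    ContractSummary(author="Jane Doe", parties=["Contoso", "Fabrikam"], sector="healthcare")
)
print(recipients)
print(body)
```

In the scenario Lamanna describes, this logic would be generated from a natural-language request or assembled with low-code tools rather than written by hand; the code only makes the flow of data concrete.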

Content AI

The digital transformation of the past several years has added to the flood of content that people around the world produce. Microsoft customers, for example, now add about 1.6 billion pieces of content every day to Microsoft 365. Think marketing presentations, contracts, invoices and work orders along with video recordings and transcripts of Teams meetings.

“They’re creating documents, they’re collaborating on them in Teams and they are storing them in SharePoint-powered experiences,” said Jeff Teper, Microsoft president of collaborative apps and platform. “What we want to do is integrate AI technologies with this content so clients can do more structured activities like contract approvals, invoice management and regulatory filings.”

That’s why Microsoft created Microsoft Syntex, a new content AI offering for Microsoft 365 that leverages Azure Cognitive Services and other AI technologies to transform how content is created, processed and discovered. It reads, tags and indexes content – whether digital or paper – making it searchable and available within specific applications or as reusable knowledge. It can also manage the content lifecycle with security and retention settings.

For instance, TaylorMade Golf Company turned to Microsoft Syntex for a comprehensive document management system to organize and secure emails, attachments and other documents for intellectual property and patent filings. Previously, company lawyers managed this content by hand, spending hours filing and moving documents to be shared and processed later.

With Microsoft Syntex, these documents are automatically classified, tagged and filtered in a way that’s more secure and makes them easy to find through search instead of needing to dig through a traditional file and folder system. TaylorMade is also exploring ways to use Microsoft Syntex to automatically process orders, receipts and other transactional documents for the accounts payable and finance teams.

Other customers are using Microsoft Syntex for contract management and assembly, noted Teper. While every contract may have unique elements, they are constructed with common clauses around financial terms, change control, timeline and so forth. Rather than write those common clauses from scratch each time, people can use Syntex to assemble them from various documents and then introduce changes.

“They need AI and machine learning to spot, ‘Hey, this paragraph is very different from our standard terms. This could use some extra oversight,’” he said.

“If you’re trying to read a 100-page contract and look for the thing that’s significantly changed, that’s a lot of work versus the AI helping with that,” he added. “And then there’s the workflow around those contracts: Who approves them? Where are they stored? How do you find them later on? There’s a big part of this that’s metadata.”

When DALL∙E 2 gets personal

The availability of DALL∙E 2 in Azure OpenAI Service has sparked a series of explorations at RTL Deutschland, Germany’s largest privately held cross-media company, into how to generate personalized images based on customers’ interests. For example, in RTL’s data, research and AI competence center, data scientists are testing various strategies to enhance the user experience with generative imagery.

RTL Deutschland’s streaming service RTL+ is expanding to offer on-demand access to millions of videos, music albums, podcasts, audiobooks and e-magazines. The platform relies heavily on images to grab people’s attention, said Marc Egger, senior vice president of data products and technology for the RTL data team.

“Even if you have the perfect recommendation, you still don’t know whether the user will click on it because the user is using visual cues to decide whether he or she is interested in consuming something. So artwork is really important, and you have to have the right artwork for the right person,” he said.

Imagine a rom-com about a professional soccer player who gets transferred to Paris and falls in love with a French sportswriter. A sports fan might be more inclined to check out the movie if there’s an image of a soccer game. Someone who loves romance novels or travel might be more interested in an image of the couple kissing under the Eiffel Tower.

Combining DALL∙E 2 with metadata about the kinds of content a user has interacted with in the past could make it possible to deliver personalized imagery on a previously inconceivable scale, Egger said.

“If you have millions of users and millions of assets, you have the problem that you simply can’t scale it – the workforce doesn’t exist,” he said. “You would never have enough graphic designers to create all the personalized images you want. So, this is an enabling technology for doing things you would not otherwise be able to do.”
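One way to picture what Egger describes is a function that looks at the tags of content a user has previously watched or listened to and picks the matching artwork prompt for a title. The Python sketch below is a minimal illustration built on the soccer romantic-comedy example. The tag names, prompt wording and the `pick_artwork_prompt` function are hypothetical, not taken from RTL’s system, and the step that sends the chosen prompt to DALL∙E 2 is deliberately left out rather than guessed at.

```python
from collections import Counter

# Hypothetical prompt variants for one title (the soccer romantic-comedy example).
# Tags and wording are illustrative only, not taken from RTL's system.
ARTWORK_PROMPTS = {
    "sports": "A professional soccer match in a packed stadium, movie poster style",
    "romance": "A couple kissing under the Eiffel Tower at dusk, movie poster style",
    "travel": "A couple kissing under the Eiffel Tower at dusk, movie poster style",
}
DEFAULT_PROMPT = "A soccer player and a sportswriter in Paris, romantic comedy poster"


def pick_artwork_prompt(history_tags: list[str]) -> str:
    """Return the prompt variant matching the user's most frequent known interest tag."""
    # Count only tags that have a prompt variant for this title.
    counts = Counter(tag for tag in history_tags if tag in ARTWORK_PROMPTS)
    if not counts:
        # No usable history: fall back to generic artwork.
        return DEFAULT_PROMPT
    # most_common(1) gives the top tag; ties resolve to the tag seen first.
    top_tag, _ = counts.most_common(1)[0]
    return ARTWORK_PROMPTS[top_tag]


# A user whose history is mostly sports content gets the stadium prompt.
print(pick_artwork_prompt(["sports", "news", "sports", "travel"]))
```

Because the prompt is chosen per user rather than per title, the number of distinct images grows with the audience, which is the scaling problem Egger says no team of graphic designers could cover by hand.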

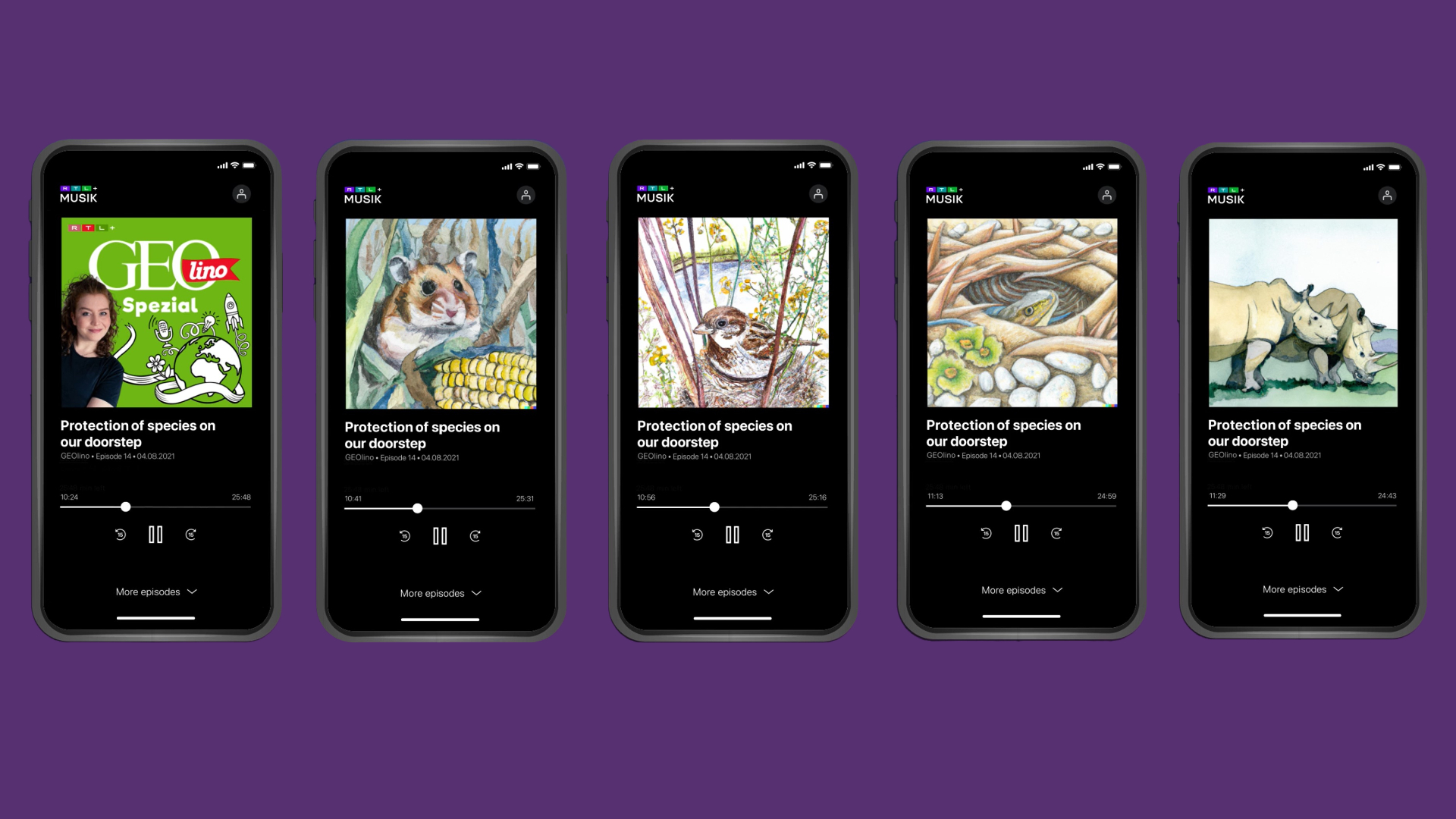

Egger’s team is also considering how to use DALL∙E 2 in Azure OpenAI Service to create visuals for content that currently lacks imagery, such as podcast episodes and scenes in audiobooks. For instance, metadata from a podcast episode could be used to generate a unique image to accompany it, rather than repeating the same generic podcast image over and over.

RTL Deutschland, Germany’s largest privately held crossmedia company, is exploring how to use DALL∙E 2 in Azure OpenAI Service to engage people browsing its streaming service RTL+. One idea is to use DALL∙E 2 to generate unique images to illustrate individual podcast episodes, rather than relying on the same podcast cover art.

Along similar lines, a person who is listening to an audiobook on their phone would typically look at the same book cover art for each chapter. DALL∙E 2 could be used to generate a unique image to accompany each scene in each chapter.

Using DALL∙E 2 through Azure OpenAI Service, Egger added, provides access to other Azure services and tools in one place, which allows his team to work efficiently and seamlessly. “As with all other software-as-a-service products, we can be sure that if we need massive amounts of imagery created by DALL∙E, we are not worried about having it online.”

The appropriate and responsible use of DALL∙E 2

No AI technology has elicited as much excitement as systems such as DALL∙E 2 that can generate images from natural language descriptions, according to Sarah Bird, a Microsoft principal group project manager for Azure AI.

“People love images, and for someone like me who is not visually artistic at all, I’m able to make something much more beautiful than I would ever be able to using other visual tools,” she said of DALL∙E 2. “It’s giving humans a new tool to express themselves creatively and communicate in compelling and fun and engaging ways.”

Her team focuses on the development of tools and techniques that guide people toward the appropriate and responsible use of AI tools such as DALL∙E 2 in Azure AI and that limit their use in ways that could cause harm.

To help prevent DALL∙E 2 from delivering inappropriate outputs in Azure OpenAI Service, OpenAI removed the most explicit sexual and violent content from the dataset used to train the model, and Azure AI deployed filters to reject prompts that violate content policy.

In addition, the team has integrated techniques that prevent DALL∙E 2 from creating images of celebrities as well as objects that are commonly used to try to trick the system into generating sexual or violent content. On the output side, the team has added models that remove AI-generated images that appear to contain adult, gore and other types of inappropriate content.


DALL∙E 2 is still subject to a challenge that many AI systems encounter: the system is only as good as the data used to train it. Without context that reveals the user’s intent, less descriptive prompts to DALL∙E 2 can surface biases embedded in the training data – text and images from the internet.

That’s why Bird is working with Microsoft product teams to teach people how to use DALL∙E 2 in ways that help them achieve their goals – such as using more descriptive prompts that help the AI system better understand what results they’re after.

“We’re designing the interfaces to help users be more successful in what it’s generating, and sharing the limitations today, so that users are able to use this tool to get the representation that they want, not whatever average representation exists on the internet,” she said.

‘How do you predict the future?’

Buse recently joined the Mattel Future Lab, which is exploring ideas such as the metaverse and NFTs, or non-fungible tokens, to expand the reach of the toy business. She’s using DALL∙E 2 as a tool to help her imagine what these virtual experiences could look like.

“It’s fun to poke around in here to think about what would come up in a virtual world based on – pick a descriptor – a forest, mermaids, whatever,” she said, explaining that DALL∙E 2 is helping her team predict this future. “How do you predict the future? You keep feeding yourself more information, more imagery and thoughts to try and imagine how this would come together.”

Boyd, the Microsoft corporate vice president for AI Platform, said DALL∙E 2 and the family of large language models that underpins it are unlocking this creative force across customers. The AI system is fuel for the imagination, enabling users to think of new and interesting ideas and bring them alive in their presentations and documents.

“What is most exciting, I think, is we’re just scratching the surface on the power of these large language models,” he said.


Top image: Mattel toy designers are investigating how to use images generated by DALL∙E 2 in Azure OpenAI Service to help inspire new Hot Wheels designs. By typing plain language prompts like “A DTM race car like a hot rod” or “A Bonneville salt flats racer like a DTM race car,” they can generate multiple images to help spark creativity and inform final designs.

John Roach writes about Microsoft research and innovation.
